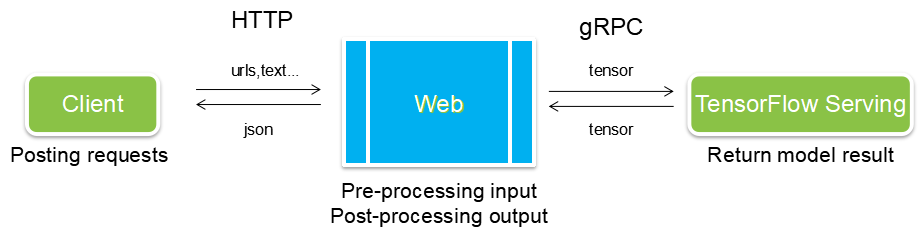

Maven Docker deployment to multiple machines: TensorFlow Serving + Docker + Tornado for fast, production-grade deployment of machine learning models (weixin_39746552's blog, CSDN)

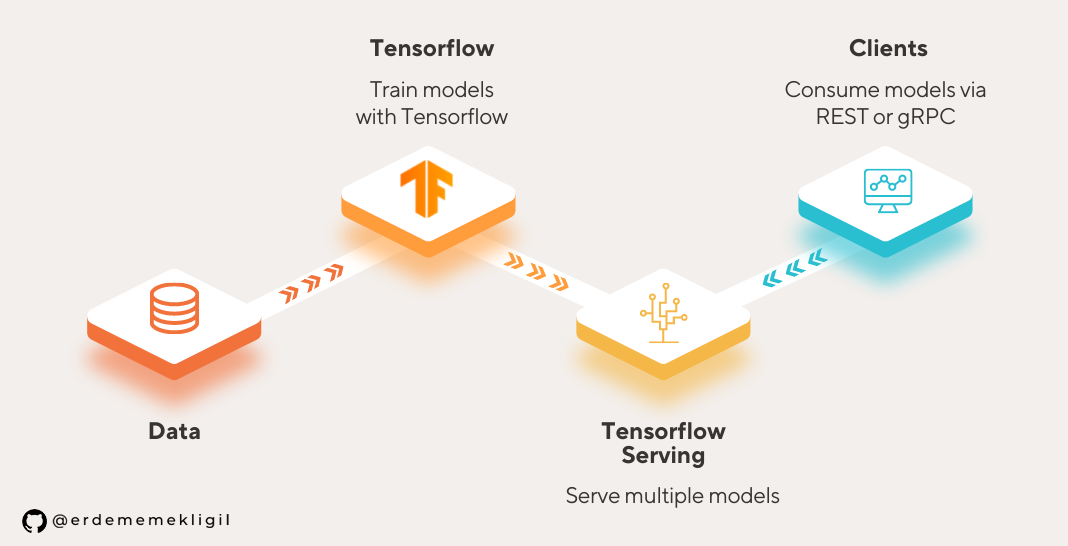

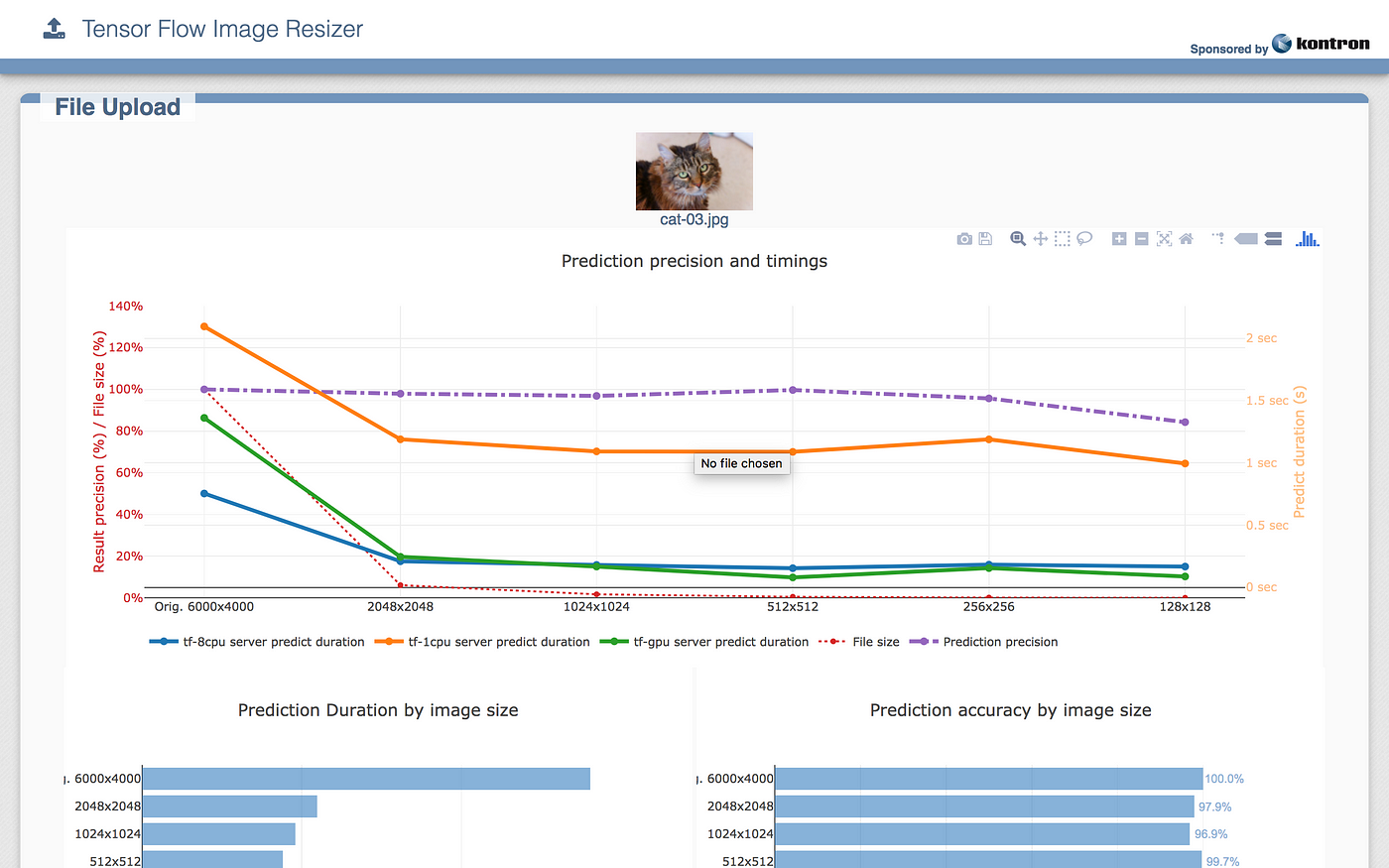

Serving an Image Classification Model with Tensorflow Serving | by Erdem Emekligil | Level Up Coding

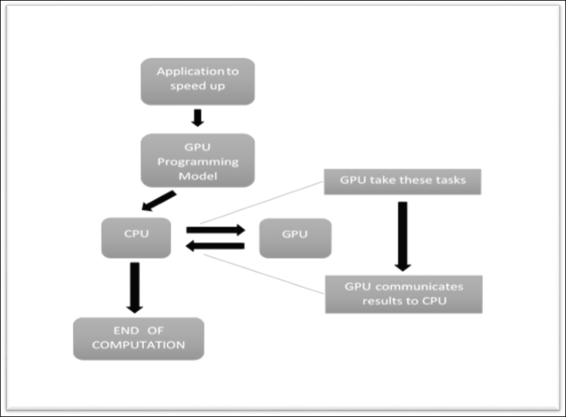

Is there a way to verify Tensorflow Serving is using GPUs on a GPU instance? · Issue #345 · tensorflow/serving · GitHub

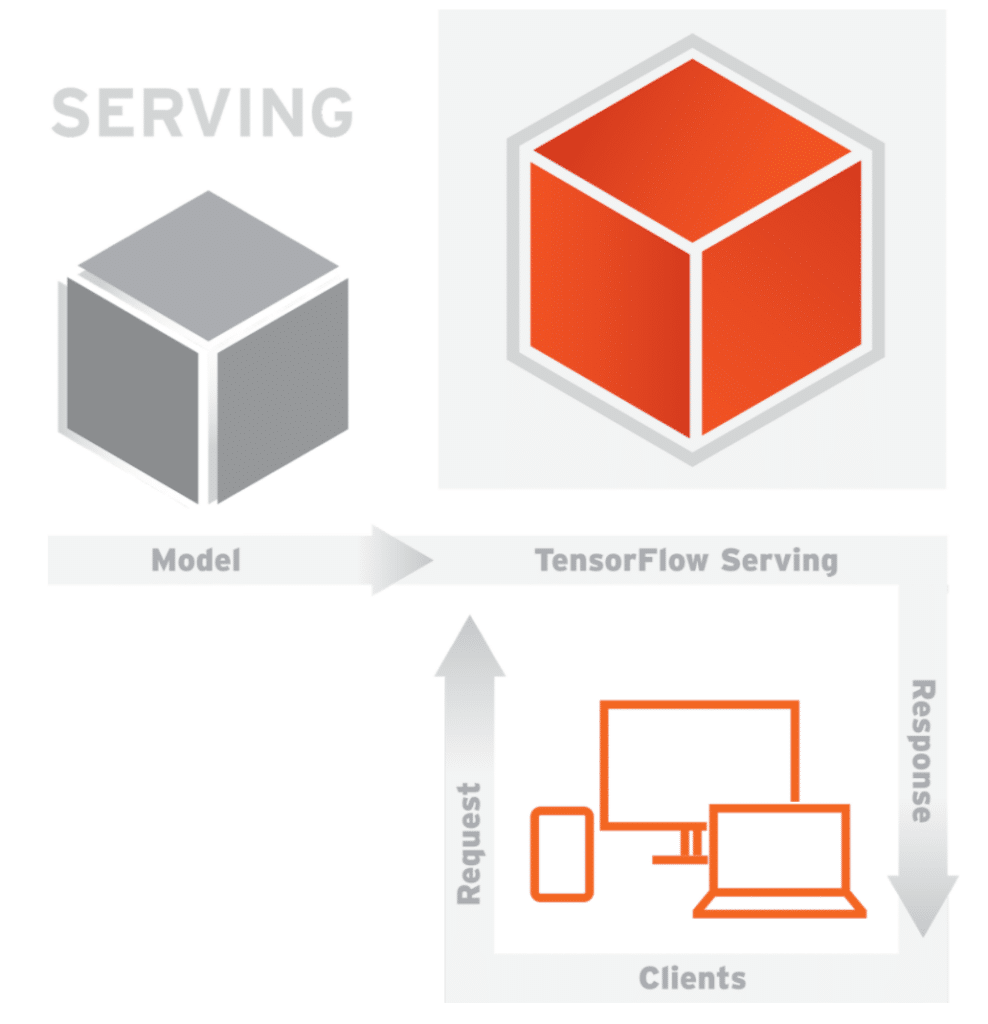

Tensorflow Serving with Docker. How to deploy ML models to production. | by Vijay Gupta | Towards Data Science
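The Docker-based deployment described in these articles typically exposes TensorFlow Serving's REST API on port 8501. The official image is started with something like `docker run -p 8501:8501 --mount type=bind,source=/path/to/my_model/,target=/models/my_model -e MODEL_NAME=my_model -t tensorflow/serving`. Below is a minimal client sketch using only the Python standard library. It assumes a hypothetical model named `my_model`, a container reachable at `localhost:8501`, and the row-oriented `{"instances": [...]}` request format. The helper names `build_predict_request` and `predict` are illustrative and are not part of TensorFlow Serving.

```python
import json
import urllib.request
from typing import Any, Optional, Sequence, Tuple

# Default REST port published by the tensorflow/serving Docker image (assumed mapping 8501:8501).
DEFAULT_REST_PORT = 8501


def build_predict_request(model_name: str,
                          instances: Sequence[Any],
                          host: str = "localhost",
                          port: int = DEFAULT_REST_PORT,
                          signature_name: Optional[str] = None) -> Tuple[str, str]:
    """Build the URL and JSON body for a TensorFlow Serving REST predict call.

    Hypothetical helper: it only formats the request and does no network I/O.
    """
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    payload: dict = {"instances": list(instances)}  # row ("instances") format
    if signature_name is not None:
        payload["signature_name"] = signature_name
    return url, json.dumps(payload)


def predict(model_name: str,
            instances: Sequence[Any],
            host: str = "localhost",
            port: int = DEFAULT_REST_PORT,
            timeout: float = 10.0) -> Any:
    """POST a predict request and return the "predictions" field of the reply.

    Hypothetical helper: it requires a running TensorFlow Serving container.
    """
    url, body = build_predict_request(model_name, instances, host, port)
    req = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["predictions"]
```

With a container running, `predict("my_model", [[1.0, 2.0, 5.0]])` would send one input row. The input shape depends entirely on the exported model's signature. Request building is kept separate from sending so that the payload can be checked without a live server.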

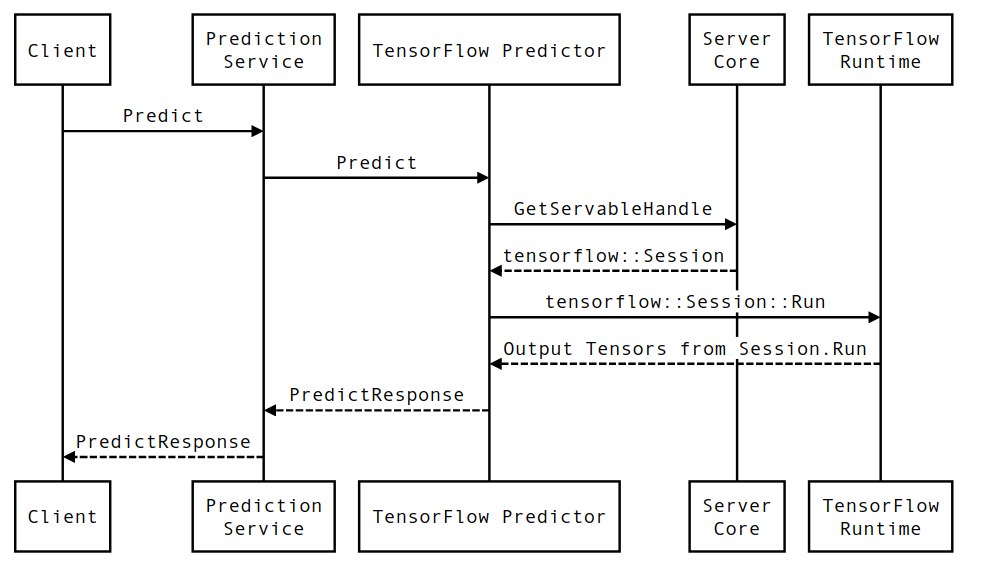

![[PDF] TensorFlow-Serving: Flexible, High-Performance ML Serving | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/cff81b22995937e1bf9533c800b24209932e402a/3-Figure1-1.png)

![[PDF] TensorFlow-Serving: Flexible, High-Performance ML Serving | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/cff81b22995937e1bf9533c800b24209932e402a/5-Figure2-1.png)